Tensorflow Chessbot - Predicting chess pieces from images by training a single-layer classifier

Other IPython Notebooks for Tensorflow Chessbot:

- Computer Vision to turn a Chessboard image into chess tiles - Blog post #1

- Programmatically generating training datasets

- Predicting chess pieces from images by training a single-layer classifier (This notebook) - Blog post #2

- Chessboard Convolutional Neural Network classifier

In this notebook we'll train a TensorFlow neural network to tell which piece is on a chess square. In the previous notebook we wrote scripts that parsed input images containing a chessboard into 32x32 grayscale chess squares.

# Init and helper functions
import tensorflow as tf
import numpy as np
import PIL.Image  # import the submodule explicitly; `import PIL` alone does not load PIL.Image
import urllib, cStringIO  # Python 2 standard library
import glob
from IPython.core.display import Markdown
from IPython.display import Image, display
import helper_functions as hf
import tensorflow_chessbot

np.set_printoptions(precision=2, suppress=True)

Let's load the tiles for the training and test datasets, splitting them in a 90/10 ratio.

# All tiles with pieces in random organizations
all_paths = np.array(glob.glob("tiles/train_tiles_C/*/*.png")) # TODO : (set labels correctly)

# Shuffle order of paths so when we split the train/test sets the order of files doesn't affect it
np.random.shuffle(all_paths)

ratio = 0.9 # training / testing ratio
divider = int(len(all_paths) * ratio)
train_paths = all_paths[:divider]
test_paths = all_paths[divider:]

# Training dataset
# Generated by programmatic screenshots of lichess.org/editor/<FEN-string>
print "Loading %d Training tiles" % train_paths.size
train_images, train_labels = hf.loadFENtiles(train_paths) # Load from generated set

# Test dataset: the remaining 10% of the same generated set
print "Loading %d Test tiles" % test_paths.size
test_images, test_labels = hf.loadFENtiles(test_paths) # Load from generated set

train_dataset = hf.DataSet(train_images, train_labels, dtype=tf.float32)
test_dataset = hf.DataSet(test_images, test_labels, dtype=tf.float32)
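The shuffle-and-split above calls `np.random.shuffle` without a seed, so every run produces a different train/test partition. As a minimal plain-Python 3 sketch (not the notebook's code), the same 90/10 split can be made reproducible by shuffling a copy with a seeded `random.Random`; the helper `split_paths` and the example file names are hypothetical.

```python
import random


# Hypothetical helper, not part of the notebook or helper_functions.
def split_paths(paths, ratio=0.9, seed=None):
    """Shuffle a copy of `paths` and split it into (train, test) lists.

    Mirrors the notebook's shuffle followed by a slice at int(len * ratio),
    but a fixed `seed` makes the split reproducible across runs.
    """
    shuffled = list(paths)  # copy, so the caller's sequence is left untouched
    random.Random(seed).shuffle(shuffled)
    divider = int(len(shuffled) * ratio)
    return shuffled[:divider], shuffled[divider:]


# Hypothetical file names, for illustration only
paths = ["tile_%d.png" % i for i in range(20)]
train, test = split_paths(paths, ratio=0.9, seed=42)
print(len(train), len(test))  # 18 2
```

Passing the same seed twice returns the same partition, which makes accuracy numbers comparable between runs.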

Cool, let's look at a few images in the training set.

# Visualize a couple tiles
for i in np.random.choice(train_dataset.num_examples, 5, replace=False):
    print "%d: Piece(%s)" % (i, hf.label2Name(train_dataset.labels[i]))
    hf.display_array(np.reshape(train_dataset.images[i,:],[32,32]))
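`hf.label2Name` and `hf.labelIndex2Name` come from the notebook's `helper_functions` module, which isn't shown here. From the code we only know that there are 13 classes and that index 0 is the empty square. A hypothetical sketch of the one-hot encoding, assuming the order `' KQRBNPkqrbnp'` (an assumption; check helper_functions for the real order):

```python
# ASSUMPTION: label order with index 0 = empty square, then white pieces
# (uppercase) and black pieces (lowercase). The real order is defined in
# helper_functions, which is not shown.
PIECES = " KQRBNPkqrbnp"


def name_to_label(name):
    """Return a 13-element one-hot list for a piece character (' ' = empty)."""
    label = [0.0] * len(PIECES)
    label[PIECES.index(name)] = 1.0
    return label


def label_index_to_name(index):
    """Map a class index (e.g. an argmax output) to its piece character."""
    return PIECES[index]


def label_to_name(label):
    """Map a one-hot (or probability) vector to the piece with the largest entry."""
    best = max(range(len(label)), key=lambda i: label[i])
    return label_index_to_name(best)


print(repr(label_to_name(name_to_label("n"))))  # 'n'
```

Because `label_to_name` takes the largest entry, the same function also works on the softmax probability vectors the network produces.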

The tiles look good. Now that we've loaded the data, let's build a simple softmax regression classifier based on the TensorFlow beginner tutorial.
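Softmax regression computes `y = softmax(xW + b)` and is trained on the cross-entropy `-sum(y_ * log(y))`. The plain-Python sketch below (an illustration, not TensorFlow's implementation; all names are hypothetical) spells out both for a single tile. It computes the loss from the logits with the log-sum-exp trick, because taking `log` of a softmax output that has underflowed to 0 gives `-inf` (and `NaN` once multiplied by a zero label); TensorFlow provides `tf.nn.softmax_cross_entropy_with_logits` for the same reason.

```python
import math


def logits(x, W, b):
    """Compute x·W + b for one flattened tile x (length D), W (D x K) and b (length K)."""
    return [sum(x[d] * W[d][k] for d in range(len(x))) + b[k] for k in range(len(b))]


def softmax(z):
    """Class probabilities; the max is subtracted first so exp() cannot overflow."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(one_hot, z):
    """-sum(y_ * log(softmax(z))), computed from the logits z via log-sum-exp.

    log(softmax(z)[k]) = z[k] - logsumexp(z), which stays finite even when
    softmax(z)[k] itself would underflow to 0.
    """
    m = max(z)
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in z))
    return -sum(t * (v - log_sum_exp) for t, v in zip(one_hot, z))


# With all-zero W and b (as initialized in the notebook) every class gets 1/13,
# so the loss for any one-hot label is log(13).
D, K = 4, 13
W = [[0.0] * K for _ in range(D)]
b = [0.0] * K
z = logits([0.5, 0.1, 0.9, 0.3], W, b)
label = [0.0] * K
label[3] = 1.0
print(round(cross_entropy(label, z), 3))  # 2.565
```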

# Input: each row is one flattened 32x32 tile
x = tf.placeholder(tf.float32, [None, 32*32])
# Weights and biases for 13 classes (empty square + 6 white + 6 black pieces)
W = tf.Variable(tf.zeros([32*32, 13]))
b = tf.Variable(tf.zeros([13]))
# Predicted class probabilities
y = tf.nn.softmax(tf.matmul(x, W) + b)
# One-hot ground-truth labels
y_ = tf.placeholder(tf.float32, [None, 13])

cross_entropy = -tf.reduce_sum(y_*tf.log(y))
train_step = tf.train.GradientDescentOptimizer(0.001).minimize(cross_entropy)
# train_step = tf.train.AdamOptimizer(0.01).minimize(cross_entropy)

init = tf.initialize_all_variables()
sess = tf.Session()
sess.run(init)

N = 6000
print "Training for %d steps..." % N
for i in range(N):
    batch_xs, batch_ys = train_dataset.next_batch(100)
    sess.run(train_step, feed_dict={x: batch_xs, y_: batch_ys})
    if ((i+1) % 500) == 0:
        print "\t%d/%d" % (i+1, N)
print "Finished training."

# Fraction of test tiles whose most probable class matches the label
correct_prediction = tf.equal(tf.argmax(y,1), tf.argmax(y_,1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, "float"))
print "Accuracy: %g\n" % sess.run(accuracy, feed_dict={x: test_dataset.images, y_: test_dataset.labels})

Looks like it has memorized everything in the datasets we collected. Let's look at the weights to get an idea of what it sees for each piece.
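For intuition about what `GradientDescentOptimizer(0.001).minimize(cross_entropy)` and the `accuracy` op compute for this model, here is a small plain-Python sketch on toy data (hypothetical names, not TensorFlow's code). With softmax plus cross-entropy, the gradient with respect to the logits is simply `p - y_`; because the notebook's loss uses `reduce_sum`, the per-example gradients are summed rather than averaged. Accuracy is the fraction of rows whose highest-probability class matches the label's.

```python
import math


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x, W, b):
    """softmax(x·W + b) for one input row x."""
    z = [sum(x[d] * W[d][k] for d in range(len(x))) + b[k] for k in range(len(b))]
    return softmax(z)


def argmax(v):
    # max() returns the first maximal index, so ties go to the lowest class
    return max(range(len(v)), key=lambda i: v[i])


def sgd_step(batch_x, batch_y, W, b, lr):
    """One gradient-descent step on the *summed* cross-entropy; updates W and b in place.

    For softmax + cross-entropy, d(loss)/d(logit_k) = p_k - y_k, hence
    dW[d][k] = sum_n x_n[d] * (p_nk - y_nk) and db[k] = sum_n (p_nk - y_nk).
    """
    D, K = len(W), len(b)
    grad_W = [[0.0] * K for _ in range(D)]
    grad_b = [0.0] * K
    for x, y in zip(batch_x, batch_y):
        p = predict(x, W, b)
        for k in range(K):
            err = p[k] - y[k]
            grad_b[k] += err
            for d in range(D):
                grad_W[d][k] += x[d] * err
    for d in range(D):
        for k in range(K):
            W[d][k] -= lr * grad_W[d][k]
    for k in range(K):
        b[k] -= lr * grad_b[k]


def accuracy(xs, ys, W, b):
    """Fraction of rows where argmax(prediction) == argmax(one-hot label)."""
    hits = sum(argmax(predict(x, W, b)) == argmax(y) for x, y in zip(xs, ys))
    return hits / len(xs)


# Toy data: two features, two classes
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1], [0.1, 1.0]]
ys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
W = [[0.0, 0.0], [0.0, 0.0]]
b = [0.0, 0.0]
print(accuracy(xs, ys, W, b))  # 0.5: all-zero weights give uniform predictions
for _ in range(50):
    sgd_step(xs, ys, W, b, lr=0.1)
print(accuracy(xs, ys, W, b))  # 1.0
```

Starting from zero weights every prediction is uniform, so `argmax` picks class 0 for every row; on the toy data that yields 50% accuracy before any training.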

Weights

print "Visualization of Weights as negative(Red) to positive(Blue)"
weights = sess.run(W)  # fetch the 1024x13 weight matrix once
for i in range(13):
    print "Piece: %s" % hf.labelIndex2Name(i)
    piece_weight = np.reshape(weights[:,i], [32,32])
    hf.display_weight(piece_weight,rng=[-0.2,0.2])
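`hf.display_weight` isn't shown in this notebook. One plausible way to map each weight onto the negative(Red)-to-positive(Blue) scale with `rng=[-0.2,0.2]` is sketched below; the helper names, the clipping, and the white midpoint are assumptions, not the helper's actual behavior.

```python
# Hypothetical helpers illustrating a red/white/blue weight colormap;
# ASSUMPTION: not the code of hf.display_weight. Requires rng[0] < 0 < rng[1].
def weight_to_rgb(w, rng=(-0.2, 0.2)):
    """Map a weight to an (R, G, B) tuple: red for negative, white at 0, blue for positive.

    Weights outside `rng` are clipped to its ends.
    """
    lo, hi = rng
    w = min(max(w, lo), hi)  # clip into the display range
    if w < 0:
        fade = round(255 * (1 - w / lo))  # w / lo is 1 at the most negative weight
        return (255, fade, fade)          # white -> red
    fade = round(255 * (1 - w / hi))      # w / hi is 1 at the most positive weight
    return (fade, fade, 255)              # white -> blue


def weights_to_image(weights, rng=(-0.2, 0.2)):
    """Convert a 2-D list of weights (e.g. 32x32 for one piece) into rows of RGB tuples."""
    return [[weight_to_rgb(w, rng) for w in row] for row in weights]
```

Clipping to a fixed range, rather than scaling each piece to its own extremes, keeps the 13 weight images comparable with one another.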

Cool, the piece shapes show up clearly within the weights. Let's have a look at the failure cases to get a sense of what went wrong.

# Indices of misclassified test tiles
mistakes = tf.where(tf.logical_not(correct_prediction))
mistake_indices = sess.run(mistakes, feed_dict={x: test_dataset.images,
                                                y_: test_dataset.labels}).flatten()
guess_prob, guessed = sess.run([y, tf.argmax(y,1)], feed_dict={x: test_dataset.images})

print "%d mistakes:" % mistake_indices.size
# Show up to 5 mistakes (np.random.choice fails if asked for more than exist).
# `actual`/`guess` are used as names so the bias Variable `b` is not overwritten.
for idx in np.random.choice(mistake_indices, min(5, mistake_indices.size), replace=False):
    actual, guess = test_dataset.labels[idx], guessed[idx]
    print "---"
    print "\t#%d | Actual: '%s', Guessed: '%s'" % (idx, hf.label2Name(actual), hf.labelIndex2Name(guess))
    print "Actual:", actual
    print " Guess:", guess_prob[idx,:]
    hf.display_array(np.reshape(test_dataset.images[idx,:],[32,32]))

It looks like the network has learned that pieces have black borders. This piece set doesn't have them and made up only a small part of the training set, so the network fails and thinks it's looking at blank squares: we need more training data! From the label probabilities, it did a reasonable job of recognizing that the pieces were white, and its second-best guesses tended to be close to the right answer; the blank square just won out.
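Sampling five mistakes gives anecdotes; tallying every misclassified test tile by `(actual, guessed)` pair shows which confusions dominate. A minimal sketch, assuming integer class indices (in the notebook these would be `np.argmax(test_dataset.labels, 1)` and `guessed`) and the same hypothetical `' KQRBNPkqrbnp'` label order; the helper name is also hypothetical.

```python
from collections import Counter

# ASSUMPTION: hypothetical label order, index 0 = empty square.
PIECES = " KQRBNPkqrbnp"


def confusion_pairs(actual_indices, guessed_indices, names=PIECES):
    """Count (actual, guessed) piece pairs over misclassified tiles, most common first."""
    counts = Counter()
    for a, g in zip(actual_indices, guessed_indices):
        if a != g:
            counts[(names[a], names[g])] += 1
    return counts.most_common()


# Toy example: two white pawns read as bishops, one black pawn read as a bishop.
actual = [6, 6, 0, 12, 6]
guessed = [4, 4, 0, 10, 6]
print(confusion_pairs(actual, guessed))  # [(('P', 'B'), 2), (('p', 'b'), 1)]
```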

Also, let's look at several random selections, including successes.

for idx in np.random.choice(test_dataset.num_examples,5,replace=False):
    actual, guess = test_dataset.labels[idx], guessed[idx]
    print "#%d | Actual: '%s', Guessed: '%s'" % (idx, hf.label2Name(actual), hf.labelIndex2Name(guess))
    hf.display_array(np.reshape(test_dataset.images[idx,:],[32,32]))

Manual validation via screenshots on reddit

We'll eventually build training, test, and validation datasets of different proportions in one go, but for now, let's build a wrapper that, given an image, returns a predicted FEN.

validate_img_path = 'chessboards/reddit/aL64q8w.png'
img_arr = tensorflow_chessbot.loadImage(validate_img_path)
tiles = tensorflow_chessbot.getTiles(img_arr)

# See the screenshot
display(Image(validate_img_path))

# See one of the tiles: index 5 (the sixth tile, counting from A1) corresponds to F1
print "Let's see the tile at index 5, corresponding to F1"
hf.display_array(tiles[:,:,5])

# Flatten the 32x32x64 stack of tiles into 64 rows of 1024 pixels, one row per tile
validation_set = np.swapaxes(np.reshape(tiles, [32*32, 64]),0,1)
guess_prob, guessed = sess.run([y, tf.argmax(y,1)], feed_dict={x: validation_set})

print "First 5 tiles"
for idx in range(5):
    guess = guessed[idx]
    print "#%d | Actual: '?', Guessed: '%s'" % (idx, hf.labelIndex2Name(guess))
    hf.display_array(np.reshape(validation_set[idx,:],[32,32]))
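`getTiles` returns the 64 tiles stacked along the last axis of a 32x32x64 array, while the network expects one flattened tile per row. The `np.swapaxes(np.reshape(tiles, [32*32, 64]), 0, 1)` line performs that conversion; a plain-Python equivalent on nested lists (the helper name `tiles_to_rows` is hypothetical) makes the index mapping explicit.

```python
# Hypothetical helper mirroring the NumPy reshape + swapaxes on nested lists.
def tiles_to_rows(tiles):
    """Turn an H x W x N nested list (N tiles stacked on the last axis) into N rows of H*W pixels.

    Same result as np.swapaxes(np.reshape(tiles, [H*W, N]), 0, 1):
    row t holds tile t in row-major order, so rows[t][r*W + c] == tiles[r][c][t].
    """
    H, W, N = len(tiles), len(tiles[0]), len(tiles[0][0])
    return [[tiles[r][c][t] for r in range(H) for c in range(W)] for t in range(N)]


# Tiny 2x2 "tiles", three of them; each value encodes (row, column, tile) as r*100 + c*10 + t
tiles = [[[r * 100 + c * 10 + t for t in range(3)] for c in range(2)] for r in range(2)]
print(tiles_to_rows(tiles)[1])  # [1, 11, 101, 111]
```

Because NumPy's default reshape is row-major, pixel `(r, c)` of tile `t` lands at `rows[t][r*32 + c]`, which is exactly the order the `np.reshape(..., [32,32])` display calls undo.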

Oh my, those guesses look correct! Let's generate a FEN string from the guessed results and view it side by side with the screenshot.

# guessed is tiles A1-H8 rank-order, so to make a FEN we just need to flip the ranks from 1-8 to 8-1
pieceNames = map(lambda k: '1' if k == 0 else hf.labelIndex2Name(k), guessed) # exchange ' ' for '1' for FEN
fen = '/'.join([''.join(pieceNames[i*8:(i+1)*8]) for i in reversed(range(8))])
print "FEN:",fen

# See our prediction as a chessboard
display(Markdown("Prediction: [Lichess analysis](http://www.lichess.org/analysis/%s)" % fen))
display(Image(url='http://www.fen-to-image.com/image/%s' % fen))

# See the original screenshot we took from reddit
print "Actual"
display(Image(validate_img_path))

A perfect match! Awesome. At this point we have enough to make predictions from several lichess boards (not all of them yet) and return a result, so we can build our reddit chatbot now.
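The FEN built above writes one `'1'` per empty square (e.g. `11111111` for an empty rank), whereas standard FEN collapses each run of empty squares into a single digit, as in `8`. A sketch that does this, assuming the same hypothetical `' KQRBNPkqrbnp'` label order and the A1-to-H8 tile order noted in the code comments (the helper name is hypothetical):

```python
# ASSUMPTION: hypothetical label order, index 0 = empty square.
PIECES = " KQRBNPkqrbnp"


def indices_to_fen(guessed):
    """Build a FEN board field from 64 class indices ordered A1, B1, ..., H1, A2, ..., H8.

    FEN starts at rank 8, so ranks are emitted in reverse, and each run of
    empty squares is collapsed into one digit (eight empties -> '8').
    """
    ranks = []
    for rank in reversed(range(8)):
        row, empties = "", 0
        for index in guessed[rank * 8:(rank + 1) * 8]:
            if index == 0:
                empties += 1
            else:
                if empties:
                    row += str(empties)
                    empties = 0
                row += PIECES[index]
        if empties:
            row += str(empties)
        ranks.append(row)
    return "/".join(ranks)


# The starting position, listed from A1 to H8
start = ([PIECES.index(c) for c in "RNBQKBNR" + "P" * 8]
         + [0] * 32
         + [PIECES.index(c) for c in "p" * 8 + "rnbqkbnr"])
print(indices_to_fen(start))  # rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR
```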

Predict from image url

Let's wrap up predictions into a single function call from a URL, and test it on a few reddit posts.

def getPrediction(img):
    """Run trained neural network on tiles generated from image"""
    # Convert to grayscale numpy array
    img_arr = np.asarray(img.convert("L"), dtype=np.float32)

    # Use computer vision to get the tiles
    tiles = tensorflow_chessbot.getTiles(img_arr)
    # Bail out if no tiles were found (an identity check `is []` would never be true)
    if len(tiles) == 0:
        print "Couldn't parse chessboard"
        return ""

    # Reshape into 64x1024 input data (one row per tile), the format used by the neural network
    validation_set = np.swapaxes(np.reshape(tiles, [32*32, 64]),0,1)

    # Run neural network on data
    guess_prob, guessed = sess.run([y, tf.argmax(y,1)], feed_dict={x: validation_set})

    # Convert guess into FEN string
    # guessed is tiles A1-H8 rank-order, so to make a FEN we just need to flip the ranks from 1-8 to 8-1
    pieceNames = map(lambda k: '1' if k == 0 else hf.labelIndex2Name(k), guessed) # exchange ' ' for '1' for FEN
    fen = '/'.join([''.join(pieceNames[i*8:(i+1)*8]) for i in reversed(range(8))])
    return fen

def makePrediction(image_url):
    """Given image url to a chessboard image, display a visualization of the predicted FEN and a link to a lichess analysis"""
    # Load image from url and display
    img = PIL.Image.open(cStringIO.StringIO(urllib.urlopen(image_url).read()))
    print "Image on which to make prediction: %s" % image_url
    # Downscale for display with the high-quality LANCZOS resampling filter
    hf.display_image(img.resize((200,200), PIL.Image.LANCZOS))

    # Make prediction
    fen = getPrediction(img)
    display(Markdown("Prediction: [Lichess analysis](http://www.lichess.org/analysis/%s)" % fen))
    display(Image(url='http://www.fen-to-image.com/image/%s' % fen))
    print "FEN: %s" % fen
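`makePrediction` is Python 2 code (`urllib.urlopen`, `cStringIO`). Under Python 3, a roughly equivalent loader uses `urllib.request.urlopen` and `io.BytesIO`; the helper name `fetch_image_buffer` and the 10-second timeout are choices for this sketch, not part of the notebook.

```python
import io
import urllib.request


# Hypothetical Python 3 helper, not part of the notebook.
def fetch_image_buffer(image_url, timeout=10):
    """Download `image_url` and return its bytes as an in-memory file object.

    Python 3 counterpart of cStringIO.StringIO(urllib.urlopen(url).read());
    the result can be passed to PIL.Image.open().
    """
    with urllib.request.urlopen(image_url, timeout=timeout) as response:
        return io.BytesIO(response.read())


# Usage (requires network access and Pillow):
#   from PIL import Image
#   img = Image.open(fetch_image_buffer("http://i.imgur.com/x6lLQQK.png"))
```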

Make Predictions

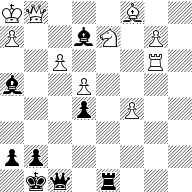

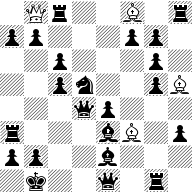

All the boilerplate is done and the model is trained, so it's time. I chose the first post I saw on reddit.com/r/chess with a chessboard screenshot that our CV algorithm can also handle: https://www.reddit.com/r/chess/comments/45inab/moderate_black_to_play_and_win/ with an image url of http://i.imgur.com/x6lLQQK.png

And awaayyy we gooo...

makePrediction('http://i.imgur.com/x6lLQQK.png')

Fantastic, a perfect match! It also handled the highlighting of the pawn move from G2 to F3.

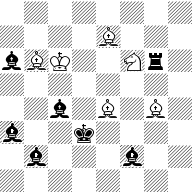

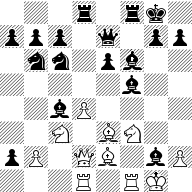

Now just for fun, let's try an image of a chessboard we've never seen before! Here's another one on reddit: https://www.reddit.com/r/chess/comments/45c8ty/is_this_position_starting_move_36_a_win_for_white/

makePrediction('http://i.imgur.com/r2r43xA.png')

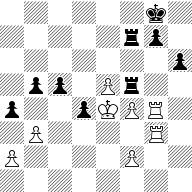

Hah, it thought the black pawns (on A3, B2, C4, and F2) were black bishops, and likewise for the white pawns. That would be a pretty bad situation for white. But amazingly, it predicted all the other pieces and empty squares correctly! This is pretty great; let's look at a few more screenshots taken from lichess. Here's https://www.reddit.com/r/chess/comments/44q2n6/tactic_from_a_game_i_just_played_white_to_move/

makePrediction('http://i.imgur.com/gSFbM1d.png')

A perfect match, as expected: when the validation images come from the same source the model was trained on, it does great, but on images from chessboards we haven't trained on, we'll see lots of mistakes. Mistakes are fun, so let's see some.

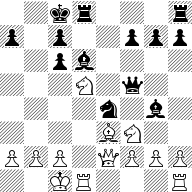

Trying with non-lichess images

makePrediction('http://imgur.com/oXpMSQI.png')

Ouch, it missed most of them there. The training data didn't contain images from this site, which looks somewhat like chess.com. We need more DATA!

makePrediction('http://imgur.com/qk5xa6q.png')

makePrediction('http://imgur.com/u4zF5Hj.png')

makePrediction('http://imgur.com/CW675pw.png')

makePrediction('https://i.ytimg.com/vi/pG1Uhw3pO8o/hqdefault.jpg')

makePrediction('http://www.caissa.com/chess-openings/img/siciliandefense1.gif')

makePrediction('http://www.jinchess.com/chessboard/?p=rnbqkbnrpPpppppp----------P----------------R----PP-PPPPPRNBQKBNR')

Ouch, tons of failures, with interesting substitutions: sometimes a piece is missing, sometimes it's a white rook instead of a black king, or a bishop instead of a pawn. How interesting.
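Instead of comparing boards by eye, a predicted FEN board field and a hand-written FEN of the actual position can be expanded to 64 squares and compared square by square. A minimal sketch with hypothetical helper names; it accepts both the notebook's `1`-per-square style and standard run-length digits.

```python
# Hypothetical helpers for comparing two FEN board fields square by square.
def expand_fen_board(board):
    """Expand a FEN board field to 64 characters, rank 8 first, '.' for empty squares."""
    squares = []
    for rank in board.split("/"):
        for ch in rank:
            if ch.isdigit():
                squares.extend("." * int(ch))  # a digit stands for that many empty squares
            else:
                squares.append(ch)
    if len(squares) != 64:
        raise ValueError("FEN board field does not describe 64 squares: %r" % board)
    return squares


def diff_fen(predicted, actual):
    """Return (square, predicted piece, actual piece) for every square where the boards differ."""
    p, a = expand_fen_board(predicted), expand_fen_board(actual)
    diffs = []
    for i in range(64):
        if p[i] != a[i]:
            rank = 8 - i // 8  # the first 8 entries belong to rank 8
            file_letter = "abcdefgh"[i % 8]
            diffs.append(("%s%d" % (file_letter, rank), p[i], a[i]))
    return diffs


# A white pawn on e2 misread as a bishop
print(diff_fen("rnbqkbnr/pppppppp/8/8/8/8/PPPPBPPP/RNBQKBNR",
               "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR"))  # [('e2', 'B', 'P')]
```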